Fog Computing

Definition

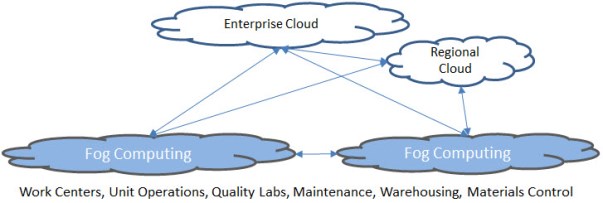

"Fog computing" is a highly distributed, broadly decentralized "cloud" that operates close to the operational level, where data is created and most often used. Fog computing at the ground level is an excellent choice for applications that need fit-for-purpose computing near the point of use: where there is high-volume real-time or time-critical local data, where data has the greatest meaning within its context, where fast local turnaround of results is important, and where sending an overabundance of raw data to an enterprise "cloud" is unnecessary or undesirable, or bandwidth is expensive or limited. Example applications of fog computing in an industrial context are analytics, optimization and advanced control at a manufacturing workcenter or unit operation, and across and between unit operations, where sensors, controllers, historians and analytical engines all share data interactively in real time. At the upper edge of the "fog" is local site-wide computing, such as manufacturing plant systems that span workcenters and unit operations; higher still are regional clouds and, finally, the cloud at the enterprise level. Fog computing is not independent of enterprise cloud computing but connected to it, sending cleansed, summarized information up and receiving in return the enterprise information needed locally.

Fog computing places data management, compute power, performance, reliability and recovery in the hands of the people who understand the needs: the operators, engineers and IT staff for a unit operation, an oil and gas platform, or other localized operation. They can then tailor the system to be "fit for purpose" in a high-speed real-time environment.

Fog computing reduces bandwidth needs, as 80% of all data is needed within the local context, such as pressures, temperatures, materials charges and flow rates. Sending such real-time information to the enterprise cloud would be burdensome in both bandwidth and centralized storage, and would bloat enterprise databases with information rarely used at that level. Instead, a limited amount of summarized information is transmitted up to the cloud, and information also flows down from the cloud to the local operation, such as customer feedback on product performance returned to the source of those products.
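As a rough illustration of sending summaries rather than raw data, the sketch below collapses a window of local readings into a single record. The `Summary` fields, the `summarize` function and the `reactor_pressure` tag are hypothetical names chosen for this example, not part of any described product.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Compact record a fog node might forward upstream (illustrative fields)."""
    tag: str
    count: int
    minimum: float
    maximum: float
    average: float


def summarize(tag: str, readings: list[float]) -> Summary:
    """Collapse a window of raw local readings into one summary record."""
    if not readings:
        raise ValueError("cannot summarize an empty window")
    return Summary(tag, len(readings), min(readings), max(readings), mean(readings))


# One minute of hypothetical 1 Hz pressure readings stays at the local node...
window = [101.2 + 0.01 * i for i in range(60)]
# ...and only this single record would travel to the enterprise cloud.
summary = summarize("reactor_pressure", window)
print(summary.tag, summary.count, summary.minimum, summary.maximum)
```

In this sketch sixty raw values become one record; the right window length and choice of statistics would depend on the operation.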

Architecture: Real-Time Large Scale Distributed Fog Computing

We place computing where it is needed, performant and suited to its purpose: at a workcenter, inside a control panel, at a desk, in a lab, in a rack in a data center, anywhere and everywhere, all sharing related data to understand and improve your performance. While located throughout your organization, a fog computing system operates as a single unified resource, a distributed low-level cloud that integrates with centralized clouds to obtain market and customer feedback, desires and behaviours that reflect product performance in the eyes of the customer. The characteristics of a fog computing system are:

- A Highly Distributed Concurrent Computing (HDCC) System

- A peer-to-peer mesh of computational nodes in a virtual hierarchical structure that matches your organization

- Communicates with smart sensors, controllers, historians, quality and materials control systems and others as peers

- Runs on affordable, off-the-shelf computing technologies

- Supports multiple operating platforms: Unix, Windows, Mac

- Employs simple, fast, standardized internet protocols suited to IoT (TCP/IP, sockets, etc.)

- Provides a browser-based user experience; after all, the browser is the key aspect of an "Industrial Internet of Things"

- Built on field-proven high performance distributed computing technologies
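The "virtual hierarchical structure that matches your organization" can be pictured as a tree of nodes, each addressable by its position. The following is a minimal sketch under that assumption; the `FogNode` class, its fields and the node names are all hypothetical, and a real mesh would add networking and peer discovery.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FogNode:
    """One computational node placed in an organization-shaped hierarchy."""
    name: str
    # Excluded from repr/compare so the parent/child cycle is never traversed.
    parent: FogNode | None = field(default=None, repr=False, compare=False)
    children: list[FogNode] = field(default_factory=list)
    local_count: int = 0  # number of raw readings held at this node

    def add_child(self, name: str) -> FogNode:
        """Attach and return a new node one level below this one."""
        child = FogNode(name, parent=self)
        self.children.append(child)
        return child

    def path(self) -> str:
        """Address of the node, built from the root downward."""
        if self.parent is None:
            return self.name
        return f"{self.parent.path()}/{self.name}"

    def total_readings(self) -> int:
        """Readings held at this node plus everything beneath it."""
        return self.local_count + sum(c.total_readings() for c in self.children)


enterprise = FogNode("enterprise")
plant = enterprise.add_child("plant_a")
workcenter = plant.add_child("workcenter_1")
workcenter.local_count = 3600

print(workcenter.path())            # enterprise/plant_a/workcenter_1
print(enterprise.total_readings())  # 3600
```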

Capturing, historizing, validating, cleaning and filtering, integrating, analyzing, predicting, adapting and optimizing performance at lower levels across the enterprise in real time requires High Performance Computing (HPC) power. This does not necessarily mean high expense: commercial off-the-shelf PCs with the power of a typical laptop will suffice, and the software running the system need not be expensive.
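To make the validating, cleaning and filtering steps concrete, here is a small sketch of two such stages. The function names, the plausible-range limits and the three-point moving average are assumptions picked for illustration; real validation rules would come from the process itself.

```python
import math


def validate(readings, low, high):
    """Drop readings that are missing, non-finite, or outside [low, high]."""
    return [
        r for r in readings
        if r is not None and math.isfinite(r) and low <= r <= high
    ]


def smooth(readings, window=3):
    """Trailing moving average to damp sensor noise."""
    smoothed = []
    for i in range(len(readings)):
        chunk = readings[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Hypothetical temperature samples with a dropout, a NaN and a spike.
raw = [20.0, None, 21.0, float("nan"), 500.0, 22.0]
clean = validate(raw, low=0.0, high=150.0)
print(clean)          # [20.0, 21.0, 22.0]
print(smooth(clean))  # [20.0, 20.5, 21.0]
```

Each stage is cheap enough to run continuously on modest hardware, which is the point the paragraph above makes about laptop-class machines.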

To architect such a system, we draw upon the experiences, architectures, tools and successes of computing giants such as Google, Amazon, YouTube, Facebook, Twitter and others. They have created robust high-performance computing architectures that span global data centers, and they have provided development tools and languages, such as Google's Go (golang), that are well suited to high-speed concurrent distributed processing, robust networking and web services. Having a similar need, but more finely distributed, we can adopt similar architectures to deliver and share results where they are needed in real time.
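The idea of concurrent processing can be sketched in a few lines. The example below uses Python's standard thread pool purely for illustration (Go would express the same pattern with goroutines and channels); the stream names and the `analyze` and `analyze_all` functions are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def analyze(stream):
    """Compute the mean of one named stream of readings."""
    name, readings = stream
    return name, sum(readings) / len(readings)


def analyze_all(streams, max_workers=4):
    """Analyze every stream concurrently and collect results by name."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(analyze, streams.items()))


# Hypothetical streams from three sensors at one workcenter.
streams = {
    "pressure": [101.0, 102.0, 103.0],
    "temperature": [20.0, 22.0],
    "flow_rate": [5.0],
}
print(analyze_all(streams))
```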

Pulling It All Together

And so by standing on the shoulders of giants, and by drawing on our decades of experience in industrial analytics, we are assembling the "Industrial Analytics of Things" in a "Fog Computing" cloud architecture to deliver outstanding performance for your organization.

Join us in this journey. We are actively seeking commercial and industrial alpha and beta testers. Be a part of something new and exciting! Give us a call at 1-281-760-4007 or send us an email.
